Abstract

Whether players are driving or cooking, there is a growing need for ways to play mobile games in hands-busy situations.

A speech-based user interface is a natural solution. While deep-learning-based on-device automatic speech recognition (ASR) methods are improving at an impressive rate, they have not yet been applied explicitly to mobile gaming.

The biggest challenge for ASR in mobile gaming is that users expect an immediate response. Recognition must therefore run in real time within the limited computational power and storage of a mobile phone. Most existing solutions sidestep this problem by sending the voice input to a cloud server, where more powerful hardware computes the result. However, this is impractical for gamers because of slower responses and additional network usage. Can a model be made small enough to be embedded in the game itself? Is there a way to apply an on-device solution to mobile gaming?

To solve these issues, we created a transformer-based model named MONICA. Compared to state-of-the-art ASR methods, it shows only minimal degradation in recognition accuracy, yet it uses only 10% of the network parameters and runs nearly 5 times faster on a typical mobile phone. With MONICA, on-device recognition of voice commands in games becomes possible. This demonstration presents an interactive web-based demo of MONICA as the voice interface to an online chess game, and showcases MONICA integrated into A3: Still Alive, a Massively Multiplayer Online Role-Playing Game (MMORPG) released and serviced by Netmarble of South Korea.

How it works

ALBERT was recently introduced to reduce memory consumption and speed up training for language representation tasks. Following the cross-layer parameter sharing proposed in ALBERT, MONICA shares both the feed-forward network and the attention parameters across all transformer encoder layers of the acoustic model. We chose TensorFlow Lite from Google's Firebase SDK as our inference engine because its operations are optimized for mobile devices.
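To make the sharing scheme concrete, here is a minimal, dependency-free Python sketch of ALBERT-style cross-layer parameter sharing. `ToyEncoderLayer` is a hypothetical stand-in in which a single weight vector replaces the real attention and feed-forward matrices; it is not MONICA's actual layer. The point is structural: with sharing, the encoder applies one parameter set at every depth, so its parameter count no longer grows with the number of layers.

```python
import math
import random
from typing import List


class ToyEncoderLayer:
    """Hypothetical stand-in for one encoder layer (attention + feed-forward).

    A single weight vector replaces the real attention and feed-forward
    matrices so the sketch stays dependency-free.
    """

    def __init__(self, d_model: int, seed: int) -> None:
        rng = random.Random(seed)
        self.weights = [rng.uniform(-1.0, 1.0) for _ in range(d_model)]

    def __call__(self, x: List[float]) -> List[float]:
        # Residual connection around an elementwise nonlinearity.
        return [xi + math.tanh(wi * xi) for xi, wi in zip(x, self.weights)]


class Encoder:
    def __init__(self, d_model: int, num_layers: int, share: bool) -> None:
        if share:
            # ALBERT-style cross-layer sharing: one parameter set reused at every depth.
            layer = ToyEncoderLayer(d_model, seed=0)
            self.layers = [layer] * num_layers
        else:
            # Conventional stack: every layer owns its own parameters.
            self.layers = [ToyEncoderLayer(d_model, seed=i) for i in range(num_layers)]

    def __call__(self, x: List[float]) -> List[float]:
        for layer in self.layers:
            x = layer(x)
        return x

    def num_parameters(self) -> int:
        # Count each distinct layer object once so shared weights are not double-counted.
        unique = {id(layer): layer for layer in self.layers}
        return sum(len(layer.weights) for layer in unique.values())


shared = Encoder(d_model=256, num_layers=12, share=True)
unshared = Encoder(d_model=256, num_layers=12, share=False)
print(shared.num_parameters(), unshared.num_parameters())  # 256 3072
```

Sharing reduces the stored parameters by roughly the number of layers, but it does not by itself reduce computation: the shared layer is still applied 12 times per forward pass.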

We perform experiments on the Librispeech corpus to evaluate the effectiveness of MONICA compared to Speech-Transformer. To further reduce network memory, we modified two parameters in the encoders: both the convolutional filter size and the attention size were reduced from 512 to 256. For a fair comparison, all hyperparameters of the two models are set to be the same. Both models are trained for 168 epochs with a batch size of 32, a word-piece vocabulary size of 2500, and 12 encoder layers with 2048 units.
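A back-of-the-envelope parameter count shows how the two changes combine. This sketch assumes a standard transformer encoder layer (four attention projections, a two-layer feed-forward network, two LayerNorms), reads the "2048 units" above as the feed-forward inner dimension, and assumes the baseline keeps attention size 512 without sharing while MONICA uses 256 with sharing. It counts encoder layers only, ignoring the convolutional front end, the decoder, and embeddings, so it is not a reconstruction of the 10% figure reported for the full models.

```python
def encoder_layer_params(d_model: int, d_ff: int) -> int:
    """Weights and biases of one standard transformer encoder layer (assumed layout)."""
    attention = 4 * (d_model * d_model + d_model)  # Q, K, V and output projections
    feed_forward = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)  # two dense layers
    layer_norms = 2 * (2 * d_model)  # two LayerNorms, each with a gain and a bias
    return attention + feed_forward + layer_norms


def encoder_stack_params(d_model: int, d_ff: int, num_layers: int, shared: bool) -> int:
    """Encoder-stack parameters with or without cross-layer sharing."""
    per_layer = encoder_layer_params(d_model, d_ff)
    return per_layer if shared else per_layer * num_layers


baseline = encoder_stack_params(d_model=512, d_ff=2048, num_layers=12, shared=False)
compact = encoder_stack_params(d_model=256, d_ff=2048, num_layers=12, shared=True)
print(baseline, compact)  # 37828608 1315072
```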

Size and Performance

We measure the performance of MONICA and Speech-Transformer by word error rate (WER). As Table 1 shows, MONICA has about 4% WER degradation on both the clean and the noisy test sets, despite having only 10% of the parameters of Speech-Transformer.
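WER is the word-level edit distance (substitutions, deletions, and insertions) between the recognized transcript and the reference, divided by the number of reference words. Below is a minimal Python sketch using the standard dynamic-programming edit distance; scoring tools used for Librispeech may apply extra text normalization that is omitted here.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the first i-1 reference words and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,  # deletion of a reference word
                curr[j - 1] + 1,  # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("start the main quest", "start main quest"))  # 0.25
```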

We measure both memory usage and inference time on a Samsung Galaxy S8. With the dynamic 8-bit quantization provided by TensorFlow Lite, MONICA uses only 27 MB of memory. Speech-Transformer needs at least 199 MB, which is too large to integrate into a mobile game even with 8-bit quantization. On the Galaxy S8, MONICA's average inference time is 263 ms per 3-second voice command, more than 5 times faster than Speech-Transformer.
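In TensorFlow Lite, dynamic range quantization is enabled by setting `converter.optimizations = [tf.lite.Optimize.DEFAULT]` on a `tf.lite.TFLiteConverter` without supplying a representative dataset: weights are stored as 8-bit integers, and supported operators quantize activations on the fly at inference time. The dependency-free sketch below illustrates only the weight side, using a simplified symmetric per-tensor scheme; TFLite's actual kernels may differ in details such as per-channel scales. Storing weights in 8 instead of 32 bits cuts their size by about 4x.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: each weight w is stored as q = round(w / scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate float weights."""
    return [qi * scale for qi in q]


def model_bytes(num_weights: int, bits_per_weight: int) -> int:
    """Storage needed for the weights alone at a given precision."""
    return num_weights * bits_per_weight // 8


weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q, scale)
print(model_bytes(1_000_000, 32), model_bytes(1_000_000, 8))  # 4000000 1000000
```

Each restored weight is within half a quantization step (`scale / 2`) of the original, which is why accuracy usually degrades only slightly.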

Model in action

Here is a demonstration video of MONICA integrated into A3: Still Alive, a dark fantasy MMORPG serviced by Netmarble in South Korea. Because A3 is currently available only in South Korea, all voice commands tested in the video are spoken in Korean; we added text captions of the voice commands.

Try it out

Sample commands

- A2 (to) A4

- move Knight to A3

- Pawn capture A3

- Castle Kingside

- Undo my last move

- Start a new game

Test tips

- Check your microphone and browser environment. Because the demo runs on TensorFlow.js, we recommend testing in the Chrome desktop browser with a microphone.

- Speak commands naturally. Our models were trained on Librispeech, a corpus of natural read speech, so humming or speaking very slowly can lead to misrecognized commands.

Test App

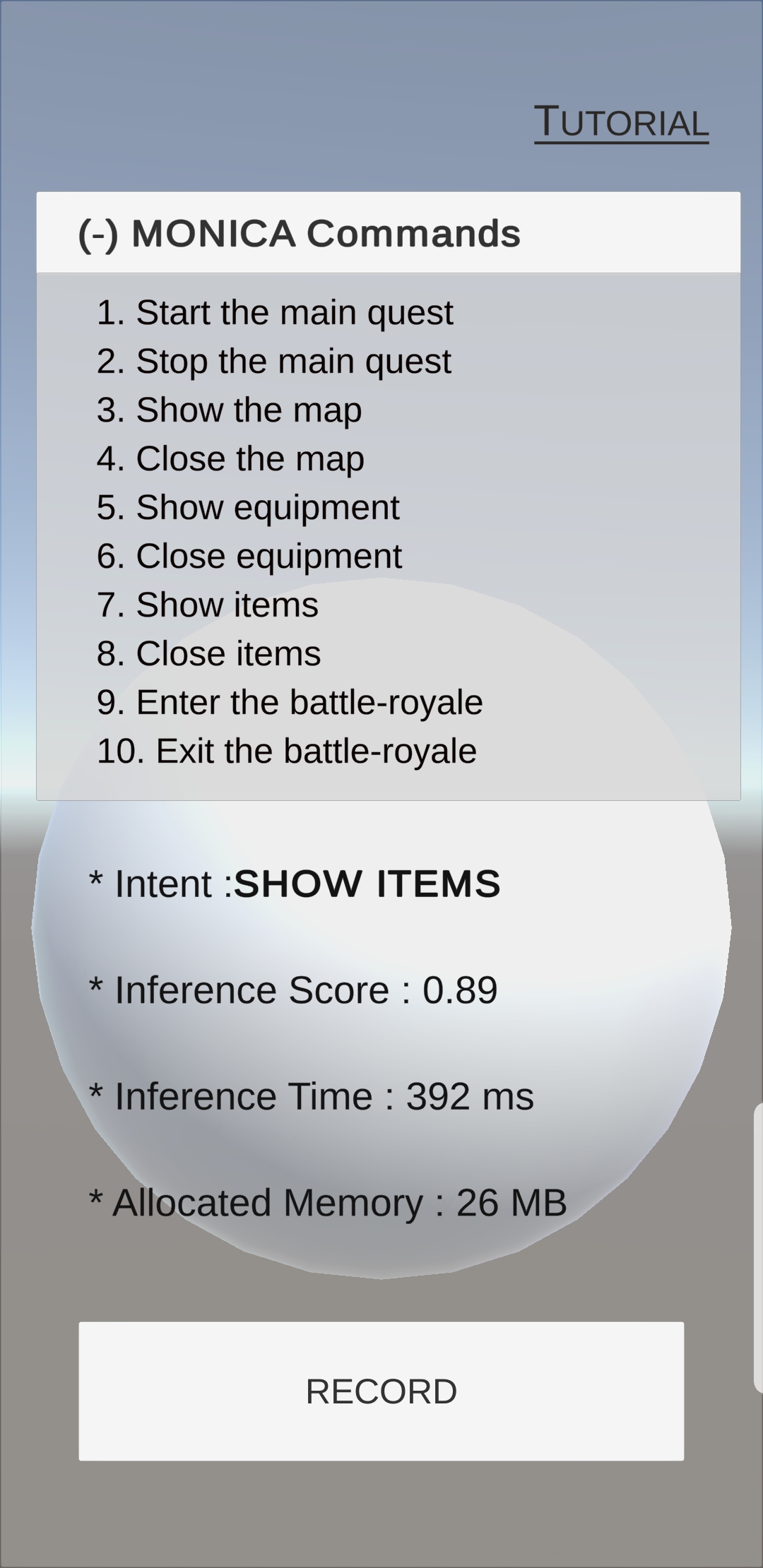

We provide a mobile application so that anyone can test the effectiveness of MONICA. After installing it, press the Record button and try any of the A3 voice commands listed on our demonstration web page. When MONICA correctly recognizes a voice command, the ball in the center turns green. The inference score, processing time, and memory usage are displayed to confirm the effectiveness of MONICA. Currently only an Android test application is available; an iOS version will be released soon.

[Pic.1] Android application screenshot.

MONICA Commands List

- Start The Main Quest

- Stop The Main Quest

- Show The Map

- Close The Map

- Show equipment

- Close equipment

- Show items

- Close items

- Enter the battle-royale

- Exit the battle-royale
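The test app maps each recognized transcript to one of the commands above and reports a similarity score for the match. The source does not specify how that score is computed, so this sketch is an assumption throughout: it uses `difflib.SequenceMatcher.ratio()` from the Python standard library as a stand-in string similarity, and a hypothetical acceptance threshold of 0.8 below which the input is rejected as not being a command.

```python
from difflib import SequenceMatcher
from typing import Optional, Tuple

# Commands from the MONICA commands list, lower-cased for matching.
COMMANDS = [
    "start the main quest",
    "stop the main quest",
    "show the map",
    "close the map",
    "show equipment",
    "close equipment",
    "show items",
    "close items",
    "enter the battle-royale",
    "exit the battle-royale",
]


def classify_command(transcript: str, threshold: float = 0.8) -> Tuple[Optional[str], float]:
    """Return (best-matching command or None, similarity score) for an ASR transcript.

    The threshold is a hypothetical value, not taken from MONICA.
    """
    text = " ".join(transcript.lower().split())  # normalize case and whitespace
    best_cmd, best_score = None, 0.0
    for cmd in COMMANDS:
        score = SequenceMatcher(None, text, cmd).ratio()
        if score > best_score:
            best_cmd, best_score = cmd, score
    if best_score < threshold:
        return None, best_score  # nothing close enough: treat as no command
    return best_cmd, best_score


print(classify_command("  Close   the Map "))  # ('close the map', 1.0)
```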

Monitoring Results

- Intent: the recognized command.

- Inference Score: the similarity score between the speech input and the command.

- Processing Time: the total time, including feature extraction, ASR, and command classification.

- Memory Usage: memory measured with Unity.Profiler.

ADDITIONAL INFORMATION

- Requires Android: 5.0 and up

- Permissions: Microphone (for recording)

- Recommended devices: Galaxy models later than the Samsung Galaxy S8

- Download App

Contact us

Do you have any questions? Please do not hesitate to contact us directly. Our team will get back to you within a matter of hours.

E-mail 1: youshin.lim@netmarble.com

E-mail 2: yhong@netmarble.com

E-mail 3: ethan.an@netmarble.com